A Scorecard Time Change

Throughout my many years of reviewing switches and writing all manner of content about them, one thing has managed to remain constant – criticism. Everyone is entitled to their own opinions, and it somehow feels as if at least that many people also feel entitled to share those opinions. Admittedly, I do think that I handle this fact of life in being a content creator pretty well… most days. As for those other days, I still maintain that those people I go off on had it coming. One of the reasons that I’ve been able to largely brush off some of the more baseless and maligned critiques of my reviews and how I score switches is that I sincerely and deeply feel that my reviews, scoring, and manner of breaking everything down have been incredibly consistent over the last 4-5 years of writing. Aside from this consistency being something that I strive to achieve for personal satisfaction, I believe it is one of the hallmarks of being a good reviewer of anything. Anyone approaching your content for the first, fifteenth, or five hundredth time should always be able to come in with the same set of expectations of you, know exactly what to get from you and where to get it, and leave satisfied knowing that it’s the same answer you’d give them yesterday or tomorrow. That sort of warm, comforting consistency is how you build a trusting audience, and I feel lucky enough to be in a position where I feel like I have one. So taking today as one of those days where I choose violence and rebuke the criticisms, let me go ahead and mathematically show you all just how wrong you are and how consistent I am:

Figure 1: Overall scores of switches plotted as a function of their date reviewed.

Figure 2: Hard scores of switches plotted as a function of their date reviewed.

Figure 3: Soft scores of switches plotted as a function of their date reviewed.

Honestly, I’m even more consistent by the numbers than I could have ever blindly hoped for and there’s not even an ounce of scoring creep in my reviews since I first started. However, upon looking a bit closer at that last graph, perhaps a few of you might have had a tiny point of somewhat fair criticism about how I’ve been scoring switches. As can be seen in my plot of ‘soft’ scores over time, the trend is that switches are ever so slightly getting worse on these metrics as the years go on. To put it as bluntly as possible, it doesn’t make sense that these soft scores – those that focus on contextual points such as price, availability, community awareness, legacy, innovation, and uniqueness – are somehow getting worse. Since I started scoring switches back in 2020, nearly all of these things have clearly improved for nearly the entire switch market across the board. Today in 2024, there are better performing switches at lower prices that are more innovative or widely available than almost anything that existed back in those days. Hell, just the last year of fancy new switch designs alone proves this to be true. And yet somehow, my context-based scores do not seem to be adjusting with the times quite like I had expected. While I think this isn’t the worst thing, as it means that the next switch I publish a glowing review of must really have gone that extra mile above the rest since I’m clearly getting jaded as a reviewer, I recognize that this isn’t satisfying to everyone out there. The reality is that the context surrounding switches exists within time and my context scores currently are attempting to exist outside of it. Thus, I must admit there is a fundamental problem with these scores:

The ‘soft’ portion of my switch scores that cover context and other implicit features associated with them is not working as it is not being resolved in time.

Well, that should be easy enough to fix, right? All that I need to do is make sure that I’m consistently and constantly updating the soft portion of the scores for all of my switches on a revolving, regular basis. By rescoring each one of my scorecards on a semi-regular basis while continuing to add to them and the other content I’m doing, everything will be as up to date and as accurate as possible at all points in time when someone might be tempted to read them. Unfortunately, the thousands of pages of documentation piled up that I’m currently adding to each and every day after my full-time 9-to-5 simply do not afford me the space or time to do such a thing. In fact, I’d have to drop almost all new content altogether to just keep recirculating and re-scoring these switches over and over as the ever-evolving switch market continues to change. As well, I cannot (and will not) choose to just arbitrarily adjust, move, or change the scores of only some switches as they ‘fall out of the times’, as that would inject even more bias than is already there in a subjective scoring system such as this. So, after a couple of months of batting around ideas, I’ve come to only one way to passively, uniformly, and consistently adjust the soft scores of all switches past, present, and future to account for time:

I’m going to introduce a ‘time decay’ into the scoring system.

While this sounds like a vaguely threatening math concept to introduce into little ol’ switch scoring, this actually is quite a simple and elegant fix to the problem at hand. Since I am demonstrably unable to properly adjust my own frame of context to the changing times around me and yet am able to remain rather constant in my interpretations over the years, I’ll just weight the scores to adjust my opinions to better match reality in time. By weighting the soft scores of reviewed switches such that they decrease with time, it’ll always mean that the average switch being scored today in better, more accessible times will have a soft score that is on average higher than older switches which came from worse times before it. While I recognize that this may be a bit concerning with respect to the narrow case of me reviewing switches with legacies that shouldn’t and wouldn’t get worse with time, such as Cherry MX Browns, I have come to realize that I’m just simply not reviewing switches of such a caliber as frequently these days. Nowadays, there are so many new, unique, and compelling switches out there for me to chase down and document at length that it’s very likely that my scoring moving forward will continue to focus solely on these switches at the bleeding edge. (That’s also why my Background sections for normal, full length reviews are slimming down on their history and increasing on their contextual relevance day by day.) So as for this crazy ‘time decay’ math concept that I’ve cooked up, I hope you’re ready to struggle through some advanced math to get on the same page as me. Years of extensive math training, up to and including graduate level applied mathematics courses, have been poured into this system, and not easily at that. After extensive testing, the formula I’ve come up with is: A 1.0 point decay per year. Trust me on this one, it actually works to correct for this issue so much more than you would have ever guessed:

Figure 4: Overall scores of switches plotted as a function of their date reviewed with the time decay function applied.

Figure 5: Soft scores of switches plotted as a function of their date reviewed with the time decay function applied.

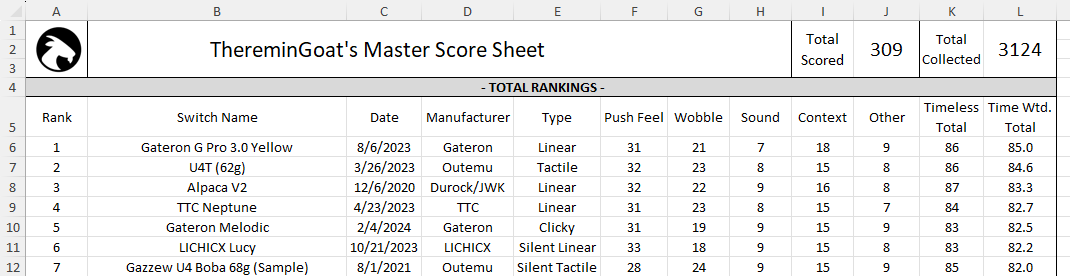

As I hope you can see, by introducing a 1.0 point decay per year on switches that were scored, the trends in both ‘soft’ and overall switch scores seem to correct quite nicely into a direction that seems to comport with reality a bit better. On both of these graphs, as we move from 2020 to 2024, switches on average appear to be getting better, more available, and at a better price per performance. Now I’m sure this immediately drums up more questions in your head about the implementation of this change, what it will look like, and how I’m going to go about doing the first overhaul to switch scoring since it was first introduced. In all reality, I think that this plugs quite nicely into the review structure and documentation I already have, and so it will be rolled out immediately as of time of publishing this short article. As for its implementation, these are the changes you will see to the Composite Score Sheet, Soft Score Sheet, and full length reviews moving forward:

Figure 6: Composite Score Sheet changes in reviews moving forward.

‘Composite’ and ‘Soft’ Score Sheet Changes

- Switches now have their date of last scoring listed next to them in column C which will be used to determine their age for their ‘Time Weighted Total Score’

This takes effect on the ‘Overall Total’ and ‘Soft Total’ tabs of the Composite Sheet

- The original ‘Total’ score has been renamed to ‘Timeless Total’

- Switches now have a ‘Time Weighted Total Score’ which is their Timeless Total Score minus 1.0 points per year since they were scored

This score will go out to the tenths place as keeping it on a whole number scale caused too many ties in the overall ranking system

- Switches will now be ranked in these sheets based on their ‘Time Weighted Total Scores’
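For anyone who wants to see the arithmetic spelled out, here is a minimal Python sketch of the time weighting described in the list above. It is not the spreadsheet formula actually used in the score sheets. The function names, the example switches, and their scores are all hypothetical, and measuring age in fractional years (days divided by 365.25) is an assumption; the article only specifies 1.0 points per year and rounding to the tenths place.

```python
from datetime import date

DECAY_PER_YEAR = 1.0    # from the article: 1.0 point of decay per year
DAYS_PER_YEAR = 365.25  # assumption: age is measured in fractional years


def time_weighted_total(timeless_total, last_scored, today):
    """Timeless Total minus 1.0 points per year since the switch was scored."""
    years = (today - last_scored).days / DAYS_PER_YEAR
    years = max(years, 0.0)  # assumption: a future scoring date means no decay
    # The article reports this score to the tenths place to reduce ties.
    return round(timeless_total - DECAY_PER_YEAR * years, 1)


def rank_switches(scores, today):
    """Rank (name, timeless_total, last_scored) rows by Time Weighted Total."""
    weighted = [
        (name, time_weighted_total(total, scored, today))
        for name, total, scored in scores
    ]
    return sorted(weighted, key=lambda row: row[1], reverse=True)


# Hypothetical example data, not real scores from the score sheets.
example = [
    ("Switch A", 80, date(2020, 4, 1)),
    ("Switch B", 78, date(2024, 1, 1)),
]
ranking = rank_switches(example, today=date(2024, 4, 1))
print(ranking)  # [('Switch B', 77.8), ('Switch A', 76.0)]
```

In this made-up case the older switch starts two points ahead on its Timeless Total but loses four points to age, so the newer switch takes the top spot once the ranking uses Time Weighted Totals.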

While this may feel a bit weird, consider how this adjustment changes the rankings of switches I’ve scored thus far. I think that you’ll probably enjoy this.

Review and Scorecard Changes

- Moving forward, the ‘Stat Card’ at the end of the review which reports a switch’s score relative to others will be based on ‘Time Weighted Total Score’ rather than the original ‘Timeless Total’

- Scorecards will only have their ‘Timeless Total’ on the chart in their respective score sheet and will not be updated with ‘Time Weighted Total Scores’

Not only does this preserve each scorecard in the time in which it was generated like all of the older reviews on the website, it also prevents me from having to do redundant work.

Figure 7: Top 10 switches to date, ranked by their 'Timeless Total' (Old) and 'Time Weighted Total' (New) scores.

Final Conclusions

All in all, I have a feeling that many of you will look at this decision and immediately feel that it addresses some of your concerns with respect to my reviews and switch scoring thus far. Not only has a change like this been asked for many times over in the past year or so, but it has also been something that I’ve been trying to think about fixing myself. While the implementation and execution of this is quite simple, know that it took a lot of dedicated consideration and thought on my end to ultimately pull this trigger. I care a lot about my content and I put a lot of effort into reviewing and scoring switches the best that I can. A decision like this, which isn’t reversible, can and will help augment my content in such a way as to keep it fresh and relevant to the times. In the event that I ever think a switch has truly slipped too low in the rankings, I can always thoroughly and accurately re-review and assess it to give it a new, corrected score and cast it in a more accurate light. However, this system affords me the opportunity to not have to go back and review every switch. I really try my hardest to be the best, most consistent, and most accurate switch reviewer that I can be for the benefit of you all who read my work and have supported me over the years, but even I can’t overcome my own internal biases so easily. Instead, I’ve chosen the much easier way out of personal change by beating it down with math. I hope you all see this as an improvement moving forward.